Revolutionizing Legal Research with Generative AI: Harnessing Retrieval Augmented Generation and Web Search

Making Your Searches and Citations More Accurate

Undoubtedly, you've noticed the transformative impact of generative AI (GenAI) on how legal professionals tackle research, drafting, and documentation. You are likely also aware that GenAI tools can produce hallucinations, which have already caused problems for legal professionals. For now, hallucinations are unavoidable: even the best AI tool can produce them.

A hallucination is an instance in which the AI generates incorrect, misleading, incomplete, or wholly fabricated information. Hallucinations can occur when the AI tool fills in gaps or confidently asserts false details as if they were factual. These errors happen because the model is “probabilistic,” meaning it predicts what comes next in a sequence of text based on patterns but may produce content that sounds plausible without having a factual basis.[1]

For example, in legal practice, an AI tool might fabricate case citations or statutes that don’t exist, mix up legal precedents, or mistake a party’s arguments for the court’s ruling, potentially leading to serious errors if the information isn’t cross-checked.
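To make the “probabilistic” point concrete, here is a toy sketch of weighted next-word selection. It is not how any particular product is implemented; the prompt, the three case names, and their probabilities are invented placeholders, and a real model chooses among many thousands of tokens rather than three phrases.

```python
import random

# Hypothetical probabilities a model might assign to continuations of the
# prompt "The controlling case is". All case names are invented placeholders.
NEXT_TOKEN_PROBS = {
    "Smith v. Jones": 0.45,    # pretend: real and on point
    "Smith v. Johnson": 0.35,  # pretend: does not exist (a hallucination)
    "Jones v. Smith": 0.20,    # pretend: real but off point
}


def sample_next(probs, rng):
    """Pick one continuation at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def count_samples(probs, n, seed=0):
    """Sample n continuations and count how often each one is chosen."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    counts = {token: 0 for token in probs}
    for _ in range(n):
        counts[sample_next(probs, rng)] += 1
    return counts


if __name__ == "__main__":
    for token, count in count_samples(NEXT_TOKEN_PROBS, 1000).items():
        print(f"{token}: {count}")
```

Nothing in the selection step checks whether a case exists. The made-up case is chosen whenever its weight comes up, which is why plausible-sounding output still has to be verified.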

Reducing hallucinations is important for ensuring that AI outputs are reliable, particularly in professional fields like law, where accuracy is critical. Retrieval Augmented Generation (RAG) can reduce hallucinations by giving the model access to relevant, current source material when it generates an answer. Count that as a win for busy lawyers.

Retrieval Augmented Generation: Enhancing AI with Targeted Information Retrieval

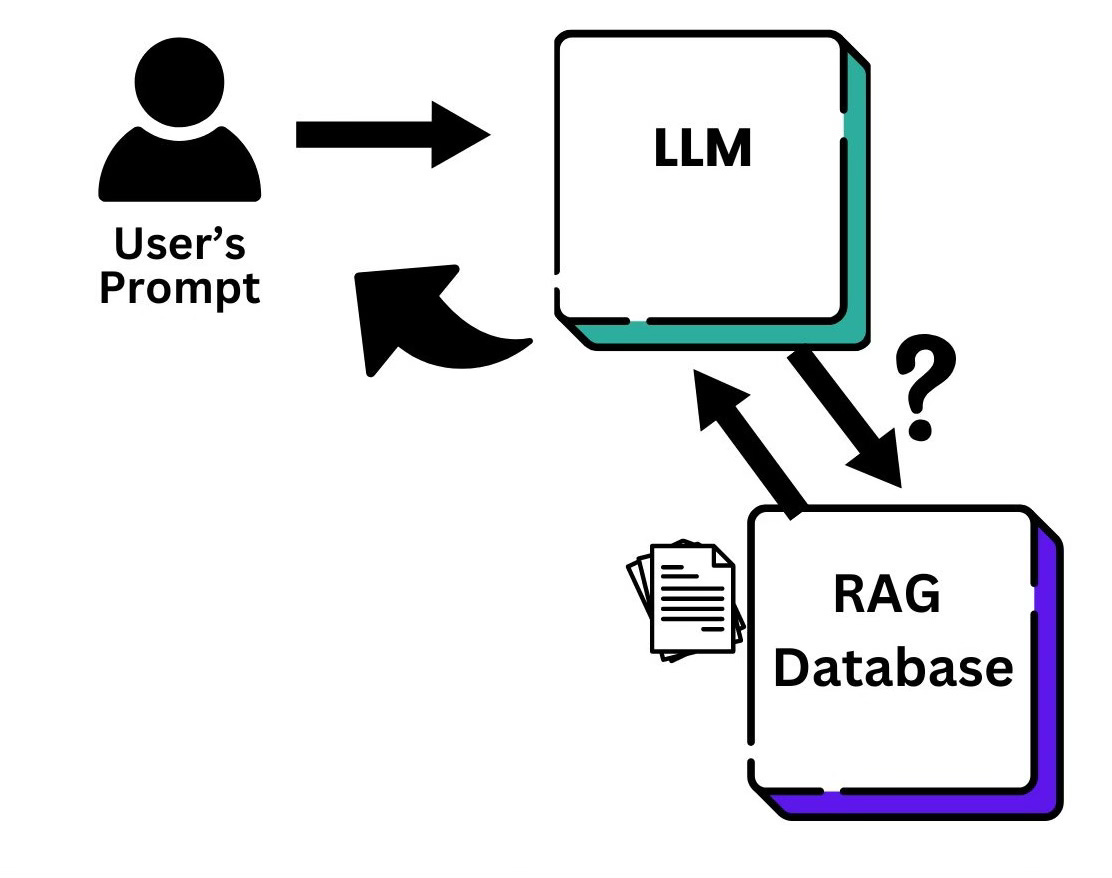

Retrieval Augmented Generation, or RAG, is a technique that combines the generative capabilities of AI with the power of information retrieval. In practice, RAG enables an AI model to dynamically access a database of information during the generation process, allowing it to pull in relevant facts, figures, and contexts to support its outputs.

For legal professionals, an AI tool equipped with RAG can augment its responses with precise references from a curated legal database, such as case law, statutes, scholarly articles, or your firm’s database or motion bank, significantly enhancing the accuracy and relevance of its outputs.

This approach addresses one of the traditional limitations of AI: the reliance solely on the data it was trained on. By integrating real-time retrieval, AI models can provide answers that reflect the latest legal precedents and doctrines, making them invaluable partners in legal research and analysis.

However, this convenience is not without a cost. If you are going to use RAG, you must be willing to invest in creating and curating the database. All new cases must be added. Likewise, the database must be updated for any new laws, court rules, or changes in the standard language of motions, contracts, or other legal documents. Large companies and law firms can solve this by having one or more staff members constantly update the database. For sole practitioners or small firms, this is likely too onerous. But you can hire a freelancer to create a RAG database and connect it to an LLM, such as the models behind ChatGPT, through the LLM's API. Or, there are companies dedicated to providing curated databases.
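The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production design: the three-document “motion bank” is hypothetical placeholder text (not legal authority), retrieval is simple word overlap rather than the embedding search real systems typically use, and the final call to an LLM is left out because it depends on the provider you choose.

```python
import re

# Hypothetical mini motion bank; a real database would hold full documents.
DOCUMENTS = {
    "msj-standard": "Summary judgment is proper when there is no genuine dispute of material fact.",
    "discovery-deadline": "Discovery requests must be served before the discovery deadline in the scheduling order.",
    "fee-agreement": "The fee agreement must be in writing and signed by the client.",
}


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return ids of the documents sharing the most words with the question."""
    question_words = tokenize(question)
    scored = []
    for doc_id, text in documents.items():
        overlap = len(question_words & tokenize(text))
        if overlap > 0:  # skip documents with nothing in common
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def build_prompt(question, documents, top_k=2):
    """Combine the retrieved passages and the question into one prompt."""
    doc_ids = retrieve(question, documents, top_k)
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{sources}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("When is summary judgment proper?", DOCUMENTS))
```

The prompt that reaches the model contains only what the retrieval step found, so the answer can be no better than the database behind it. That is why curation matters so much.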

Web Search Capabilities: Accessing the Latest Information

The capability of some LLMs, such as GPT-4o or Gemini, to perform web searches introduces an additional layer of relevance and timeliness. This feature allows the AI tool to incorporate current online information into its outputs, extending beyond its original training data. For the legal field, where regulations and case law are continually evolving, this ability can make the advice and documentation prepared with the help of AI more current.

When a legal professional poses a question or requests document preparation assistance, the AI can execute a web search to find and integrate the most recent and relevant legal developments, guidelines, or news articles. This feature is particularly valuable in areas of law that experience frequent changes, such as technology, intellectual property, and international law.

On the downside, you still have to read the information the AI model finds for you. Although the search may save you time and embarrassment by alerting you to newer information, you will need to digest it and incorporate it into your document. More importantly, subscription and specialized services such as Fastcase, Westlaw, Casetext, and Lexis block searches by non-subscribers, so the web searches performed by LLMs are likely to rely on weaker, less authoritative sites. Some free legal research sites do exist, such as George Mason University’s Antonin Scalia Law School library, Cornell Law School’s Legal Information Institute (LII), and Google Scholar Case Law. The most important thing is that you understand the limits and capabilities of the sites you are relying on and supplement the legal research if necessary.
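One way to keep track of which sites an AI tool's web search actually relied on is to sort the returned links by domain. The sketch below assumes you can get the list of source URLs from your tool. The allowlist is an illustrative assumption containing only the domains for LII and Google Scholar, and you would edit it to match the sources you trust.

```python
from urllib.parse import urlparse

# Assumed allowlist for illustration; adjust it to the sources you rely on.
TRUSTED_DOMAINS = {"law.cornell.edu", "scholar.google.com"}


def is_trusted(url, trusted=TRUSTED_DOMAINS):
    """True if the URL's host is a trusted domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in trusted)


def split_results(urls, trusted=TRUSTED_DOMAINS):
    """Separate URLs into (trusted, needs_review) lists, preserving order."""
    keep, review = [], []
    for url in urls:
        (keep if is_trusted(url, trusted) else review).append(url)
    return keep, review


if __name__ == "__main__":
    keep, review = split_results([
        "https://www.law.cornell.edu/",
        "https://blog.example.com/some-post",  # hypothetical URL
    ])
    print("Trusted:", keep)
    print("Needs review:", review)
```

A link landing in the trusted list only tells you where the information came from. You still need to read it and confirm that it is current and on point.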

Doing RAG Right

To create a useful RAG database, you must invest in high-quality content and maintain it to ensure that it stays updated and accurate. A RAG database will help keep your GenAI output relevant and precise. However, that benefit vanishes if your RAG database isn't kept current. Keep in mind that the AI uses everything in your RAG database, so documents padded with additional, irrelevant content will degrade the quality of the results.
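Keeping the database current is easier if every document carries a “last reviewed” date that you can check automatically. The sketch below is one simple way to do that. The `SourceDocument` record, the 90-day threshold, and the sample titles are all assumptions for illustration, not features of any particular RAG product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SourceDocument:
    """Hypothetical record for one document in the RAG database."""
    title: str
    last_reviewed: date


def find_stale(documents, today, max_age_days=90):
    """Return titles of documents last reviewed more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return [doc.title for doc in documents if doc.last_reviewed < cutoff]


if __name__ == "__main__":
    library = [
        SourceDocument("Local court rules", date(2024, 1, 10)),
        SourceDocument("Engagement letter template", date(2024, 5, 20)),
    ]
    print(find_stale(library, today=date(2024, 6, 1)))
```

A report like this does not update anything by itself. It only tells whoever maintains the database which documents are due for a fresh look.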

If you only have a small number of documents you need in your RAG database, you can get the benefits of a RAG database by creating a custom GPT. Or several custom GPTs. You can easily set up a custom GPT using ChatGPT. This video shows you how in less than 10 minutes.

Ensuring Valid Outputs: Best Practices for Legal Professionals

To effectively take advantage of these advanced AI features in legal practice, professionals should:

1. Choose AI Tools Wisely: Opt for AI tools that explicitly feature Retrieval Augmented Generation and web search capabilities. These features greatly enhance the tool's ability to provide accurate, relevant, and up-to-date information. And there are so many ways to use RAG to customize your output and make it more meaningful.

2. Understand the Scope and Limitations: This is always a good rule. No matter what type of assistance you use, whether your human legal assistant, your word processing program, or a legal-specialized GenAI model such as CoCounsel, Spellbook, or Harvey, you must understand that particular assistant’s capabilities and limitations. Relying on a legal AI tool whose capabilities you do not understand is like asking a new legal assistant to draft a motion for summary judgment on a very short timeline without having any idea whether they even know the rule of civil procedure on which it should be based. Test out your AI system. If you opt for a legal assistant AI tool, ask your account representative as many questions as possible. Then, set up a demo so you can test it out. Even with an AI system that has advanced features, you must critically assess its output. Familiarize yourself with the AI tool's data sources and the extent of its web search capabilities, including any limitations in accessing paywalled or specialized databases. One of my favorite things about GenAI models is that you can train them to write in your style.

3. Verify and Cross-Reference: “Trust but verify” is a proverb I learned early in my law career. Unfortunately, I learned it the hard way. The partner gave me a statement from our client about how things went down in a contract dispute between her and the opposing party and asked me to draft a motion for summary judgment. I trusted the statement. I had no reason not to trust. I was inexperienced and a bit naïve as I spent hours drafting. While reviewing the evidence and gathering supporting information, I found several inaccuracies in the information from the client. I was worried that the client seemed disingenuous. When I brought this up with the partner, he laughed and said, “Of course, that was just her perception of the events. With your clients, always trust, but verify.”

And so began a philosophy I still abide by today, in law and life. You start by trusting everything that your client says. Then, you put in the work to verify the accuracy of everything you are going to rely on. The same holds for AI-generated work products. Always verify AI-generated information by cross-referencing with authoritative sources. RAG and web search capabilities can significantly enhance the model's outputs, but the ultimate responsibility for accuracy and compliance lies with you as the attorney. Remember this critical point: Failing to verify the output you receive is, at best, irresponsible and, at worst, negligence and malpractice.

You’ve likely heard of the Massachusetts lawyer sanctioned for using false citations in his brief. When Judge Brian Davis questioned the lawyer about the cited cases he could not find, the lawyer responded that one of the assistants in his office had used a GenAI tool. In finalizing the motion, he had reviewed the citations for style but had not looked up every case. The lawyer quickly owned up to the mistake. Judge Davis was lenient, sanctioning him only $2,000, and graciously attempted to keep the lawyer’s name out of the press. The judge stated that his ruling was more of a caution to the legal profession and the Massachusetts Bar than a punishment of one lawyer. But I predict that judges will not continue to be so gracious and lenient. We, as lawyers, should know better by now. Rule 11 sanctions will begin to reflect the impatience and frustration of the court when motions and legal filings contain false information, even if it was not intentionally false. If you use this technology, you must commit to verifying all of the output.

4. Stay Informed on AI Developments: AI is rapidly evolving. Staying informed about the latest advancements and updates to AI tools can help legal professionals continue to improve the accuracy and relevance of their work. One way to do that is to like, follow, and subscribe to this newsletter – AI for Lawyers.

5. Maintain Transparency and Ethical Considerations: When utilizing AI-generated outputs, especially those enhanced by web searches or retrieval techniques like RAG, it's crucial to be transparent with clients and stakeholders about the use of AI and the steps you are taking to ensure accuracy. The courts have sanctioned some lawyers, and publicly embarrassed them, for filing motions that cited cases fabricated by ChatGPT. It's essential to have an AI policy for your firm and to train every employee on the AI policy. At a minimum, an assistant not involved in drafting the legal filing must verify the accuracy of every legal citation. Being transparent with clients about the use of AI in your practice is also crucial. Here are some suggestions on how to handle it.
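The “verify and cross-reference” step can be partly organized with a checklist generated from the draft itself. The sketch below pulls reporter-style citations out of a draft and lists the ones nobody has confirmed yet. It rests on several assumptions: the pattern recognizes only a few federal reporter formats (for example, "123 F.3d 456"), the `verified` set is a hypothetical list your reviewer maintains, and the citations in the usage example are invented placeholders.

```python
import re

# Simplified, assumed pattern: volume, reporter, first page.
# Covers only U.S., F./F.2d/F.3d/F.4th, and F. Supp./2d/3d; real formats vary.
REPORTERS = r"(?:U\.S\.|F\. ?Supp\.(?: ?[23]d)?|F\.(?:2d|3d|4th)?)"
CITATION_PATTERN = re.compile(rf"\b\d{{1,4}}\s+{REPORTERS}\s+\d{{1,4}}\b")


def extract_citations(text):
    """Return every citation-like string in the text, whitespace-normalized."""
    return [" ".join(match.group(0).split()) for match in CITATION_PATTERN.finditer(text)]


def unverified_citations(draft, verified):
    """List citations in the draft that are not in the verified set."""
    pending = []
    for citation in extract_citations(draft):
        if citation not in verified and citation not in pending:
            pending.append(citation)
    return pending


if __name__ == "__main__":
    draft = "See 111 F.3d 222; see also 333 U.S. 444."  # placeholder citations
    verified = {"333 U.S. 444"}  # hypothetical: already looked up by a reviewer
    print(unverified_citations(draft, verified))
```

This only tells the reviewer what is left to check. It cannot confirm that a case exists or that it says what the brief claims, and it will miss any citation format the pattern does not cover, so a person still has to read every cited authority.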

Conclusion

Generative AI equipped with Retrieval Augmented Generation, and perhaps bolstered by web search capabilities (currently available in some AI models), pairs the fluency of GenAI with the precision of retrieved sources. RAG improves the model's capacity to produce relevant and accurate outputs that reflect the most recent legal developments. By understanding and strategically using these capabilities, you can significantly enhance your practice and greatly reduce hallucinations, staying at the forefront of legal research and analysis while upholding the high standards of accuracy and reliability required in the legal profession.

[1] This is a core aspect of how Generative AI models work, closely tied to their underlying algorithm. Specifically, most modern GenAI models use a technique called next-token prediction, where the algorithm predicts the next word or token in a sequence based on patterns identified from large amounts of data during training. The algorithm does not “understand” the facts; it is simply a prediction machine. Since LLMs are trained on vast amounts of data, including fiction, falsehoods, and other mistakes, they can make an incorrect prediction that sounds reasonable.