Top Ten Ways to Eliminate or Reduce AI Hallucinations: A Guide for Lawyers

A David Letterman Twist on the Best, and Worst, Ways to Prevent Citing Fake Cases, for Lawyers

David Letterman, host of a late-night TV show that aired so long ago that many of you won’t recognize it, used to do a nightly countdown. He would count down in reverse order from #10 to #1, saving the best for last. I remember his “Top Ten Stupid Human Tricks” and his “Top Ten Things That Almost Rhyme with Peas.” He was humorous, clever, and occasionally insightful. Because of his offbeat approach to dumb and inane situations, his format seems like the best way to deal with the agonizingly astonishing number of hallucinated case citations that we’re seeing these days.

In a nod to Letterman, I’m presenting this article, “David Letterman’s Top Ten Things”-Style.

I’ve ranked these tips by their effectiveness, along with ease of use and implementation as a secondary consideration.

Number 10 – Fine-tune Output Expectations

This is a fancy way of saying, tell your AI tool not to make things up. Just good prompting practices. But it does help. It essentially gives your AI tool permission to give up if it cannot find a plausible answer.

How it works in legal practice:

When prompting, include the phrase, “do not make things up” OR

“Only respond with a case name or statute if you are certain it exists. Otherwise, respond ‘I don’t know.’”
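If you send prompts through a script rather than typing them by hand, the same instruction can be attached automatically. This is only a minimal sketch: the function name `with_guardrail` and the sample question are my own illustrations, not part of any AI tool.

```python
# The wording below comes from the prompt language suggested above.
GUARDRAIL = (
    "Only respond with a case name or statute if you are certain it exists. "
    "Otherwise, respond 'I don't know.' Do not make things up."
)


def with_guardrail(question: str) -> str:
    """Return the question with the anti-fabrication instruction appended."""
    return f"{question.strip()}\n\n{GUARDRAIL}"


# Hypothetical example question.
prompt = with_guardrail("Which cases address the business judgment rule?")
print(prompt)
```

Paste the printed text into your AI tool, or pass it along to whatever tool you already use.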

Why it reduces hallucinations:

Large language models are probabilistic systems trained on vast amounts of text to produce the most likely next word or phrase in response to a prompt. Their default behavior is to provide an answer—even when none exists—because saying "I don't know" was comparatively rare in their training data. So unless explicitly instructed otherwise, the model will guess, at times fabricating:

Nonexistent cases or statutes that sound plausible.

Incorrect legal reasoning based on surface similarities.

Misleading factual assertions presented with confidence.

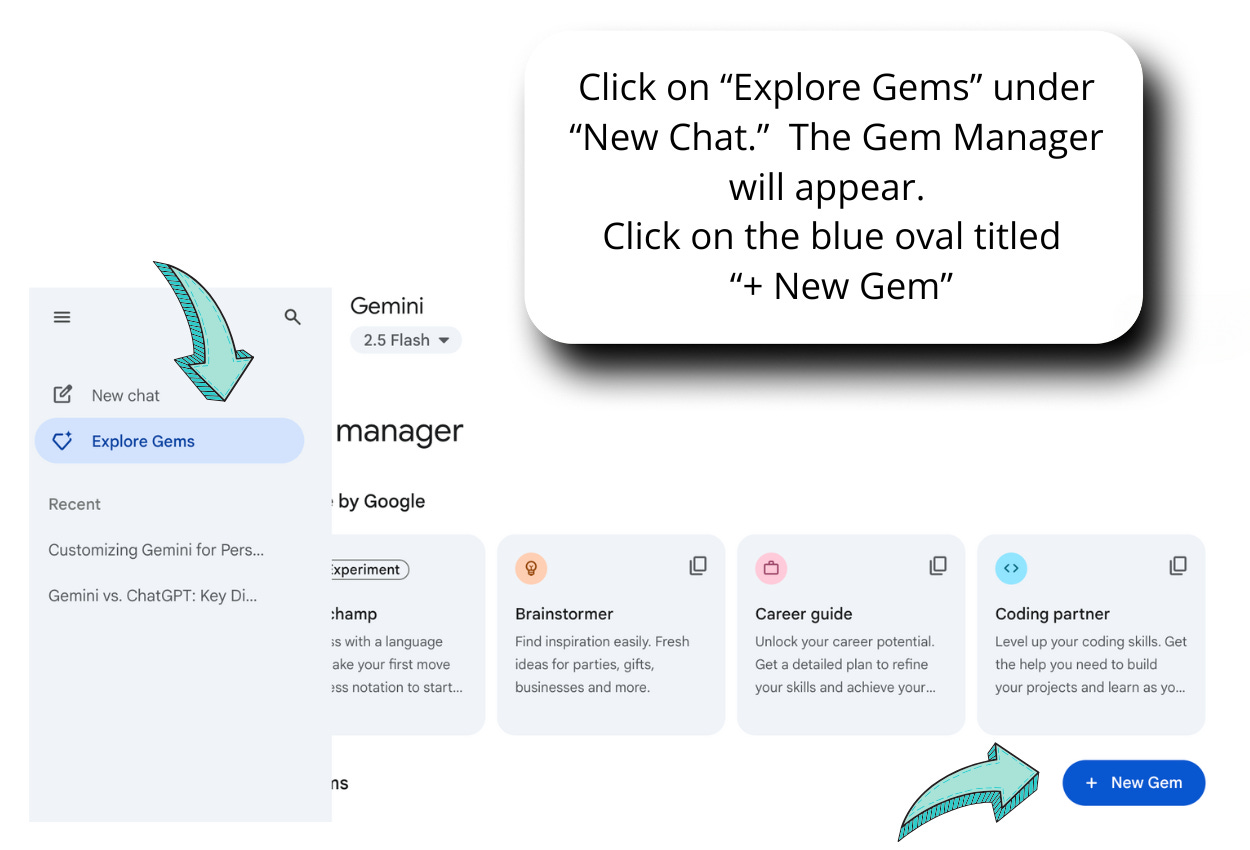

Drawbacks: Accuracy

Prompting this way is just good practice. And it is very easy to implement—just add in the above language to any prompt where you need a valid, non-hallucinated output. You can even customize some GPTs, giving them baseline instructions that will guide your experience with AI tools. Here is an image indicating how to customize ChatGPT and another for Gemini.

This does not eliminate all hallucinations. It’s ranked #10 because it’s easy to use but lacks accuracy.

To Customize ChatGPT

To Customize Gemini

Number 9 — Use a RAG Database

RAG (Retrieval-Augmented Generation) is one of the most effective ways to ground AI responses in verified legal sources. Instead of relying solely on the AI's training data, RAG systems first search a curated database of legal documents, then use those specific sources to generate responses.

How it works in legal practice:

Create a database of your firm's relevant case law, statutes, regulations, and precedents.

When you query the AI, it first retrieves the most relevant documents from your database.

The AI then uses these specific, verified sources in addition to general training data.

Citations and legal principles can be traced back to documents in your controlled database.

Why it reduces hallucinations:

RAG dramatically reduces fabricated citations because the AI primarily references documents that actually exist in your curated database. It's like giving the AI a law library to work from instead of asking it to recall legal information from memory. Essentially, you’re creating your own law library.
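For the curious, here is a toy sketch of the retrieve-then-generate idea. It makes big assumptions: the "database" is three placeholder sentences (not real authorities), and retrieval is simple word overlap, where a real RAG system would typically use a search index or embeddings. All names here are hypothetical.

```python
import re

# Hypothetical mini "database". In practice this would be your firm's vetted documents.
LIBRARY = {
    "doc-001": "Directors are protected by the business judgment rule when they act in good faith.",
    "doc-002": "A warrantless search of a vehicle requires probable cause.",
    "doc-003": "The business judgment rule does not protect decisions tainted by self-dealing.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, library: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents sharing the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(text)), doc_id) for doc_id, text in library.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def build_grounded_prompt(question: str, library: dict[str, str], k: int = 2) -> str:
    """Put the retrieved sources in front of the question so answers can be traced."""
    ids = retrieve(question, library, k)
    sources = "\n".join(f"[{doc_id}] {library[doc_id]}" for doc_id in ids)
    return (
        "Answer using ONLY the sources below and cite them by id. "
        "If they do not answer the question, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


question = "Does the business judgment rule protect self-dealing?"
print(build_grounded_prompt(question, LIBRARY))
```

The point of the design is traceability: every source the AI sees carries an id you can look up in your own library.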

Drawbacks: Price

RAG databases are great, and very useful for a number of situations. You will very significantly reduce hallucinations with a RAG database. However, it’s ranked at number 9 because a RAG database can be expensive to set up and pricey to maintain. Unless you are, or your best friend is, a “tech-nerd,” you’ll need to hire a technologist to set this up for you. Because the law is always changing and new cases are being decided, you’ll need to keep the database updated. The maintenance costs are not insignificant.

Number 8 — Run a Second LLM (or Specialist Validator), or Use Self-Consistency

Use multiple AI models to cross-check critical outputs, especially for case law research or complex legal analysis. Have one model generate the research, then use another to verify the citations and reasoning. Alternatively, ask the second model to approach the question from different angles and compare the consistency of responses. When one AI cites a case, have another AI verify it from a different angle, catching inconsistencies and errors that a single model might miss.

Alternatively, you may ask one LLM the same question many times, and then you can compare the output. You still need to verify its output.

How it works in legal practice:

Subscribe to two AI tools.

Use one AI model (like GPT-4) to generate initial legal research, then use another model (like Perplexity) to fact-check the citations and reasoning.

OR

Ask the same model to answer your legal question multiple times using different approaches, then compare results for consistency.

Deploy specialized legal AI tools to validate outputs from general-purpose models.

Question every output you receive, asking the AI model to double-check itself or to argue against itself.

Why it reduces hallucinations:

Multiple AI models trained on different datasets are unlikely to make identical errors. When two independent AI systems agree on legal conclusions and citations, you have much higher confidence in the accuracy.
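Comparing several answers by eye gets tedious, so here is a rough sketch of how a script might do the comparing. The citation pattern is a simplifying assumption that only catches formats like "111 F.3d 222", and the citations in the sample answers are made-up placeholders, not real cases.

```python
import re
from collections import Counter

# Rough pattern for reporter citations such as "111 F.3d 222".
# An assumption for illustration; real citation formats vary far more than this.
CITATION = re.compile(r"\b\d{1,4} [A-Z][\w.]* \d{1,5}\b")


def citations_in(answer: str) -> set[str]:
    """Return the distinct citation-like strings found in one answer."""
    return set(CITATION.findall(answer))


def split_by_agreement(answers: list[str], min_share: float = 1.0):
    """Split citations into those found in at least min_share of answers, and the rest."""
    counts = Counter(c for answer in answers for c in citations_in(answer))
    needed = min_share * len(answers)
    agreed = {c for c, n in counts.items() if n >= needed}
    return agreed, set(counts) - agreed


# Placeholder answers from three runs (or three tools); the citations are invented.
answers = [
    "See 111 F.3d 222 and 333 F.3d 444.",
    "The rule comes from 111 F.3d 222.",
    "Courts follow 111 F.3d 222; see also 555 U.S. 666.",
]
agreed, disputed = split_by_agreement(answers)
print("Appears in every answer:", sorted(agreed))
print("Check these first:", sorted(disputed))
```

Agreement only tells you where to look first. A citation that every run repeats can still be fake, so it still needs to be verified.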

Drawbacks: Price, Time, Accuracy

This method requires you to have two different AI tools. Because you should use free AI tools only for things of no consequence, paying for two separate AI tools can feel unnecessarily pricey, especially since hallucinations will still be possible. And there are other costs in time and effort. Once you have both subscriptions, you need to run all of your output through a second tool. For example, say you’ve drafted your motion and run it through an AI tool. You review all the output, and then run it through a second tool. You then must review all of that information and compare the two. After all of that, there still may be hallucinations. And that’s why this is #8 on this list.

Number 7 – Use Temperature Controls and Deterministic Settings

Lower the model's "temperature" settings. High "temperature" settings encourage creative, varied responses - perfect for brainstorming but dangerous for factual legal research. Low temperature settings produce more consistent, conservative outputs ideal for citation-heavy work. If I had to guess, I would say that Grok has a higher temperature setting than Claude, for example. Check out the different “personalities” of AI tools here.

When you need factual, precise legal information you can adjust your temperature accordingly.

How it works in legal practice:

Set temperature to 0.1-0.3 for case law research, statutory interpretation, and citation work.

Use temperature 0.7-0.9 only for creative tasks like brainstorming legal arguments or drafting initial outlines.

Enable deterministic or "seed" settings when you need identical results across multiple queries.

Configure your AI tools to default to conservative settings for legal research tasks.

You could even set up different AI "profiles" - one conservative for research, one creative for drafting.

Why it reduces hallucinations:

Lower temperature settings make AI more likely to stick to well-established patterns from its training data rather than generating novel (potentially incorrect) legal information. When the AI is less "creative," it's less likely to invent case citations or legal principles. Lowering the temperature setting is like telling your AI tool to be less creative and more predictable. It does not teach the tool anything new, so a low temperature reduces, but does not eliminate, made-up answers.
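To see what the temperature number actually does, here is a small worked example of the standard "softmax with temperature" calculation that turns a model's raw scores into probabilities for the next word. The three scores are made up for illustration; real models choose among many thousands of candidates.

```python
import math


def softmax_with_temperature(scores, temperature):
    """Convert raw scores to probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate next words
for t in (0.2, 0.9):
    probs = softmax_with_temperature(scores, t)
    print(f"temperature {t}: {[round(p, 3) for p in probs]}")
```

With these made-up scores, the top candidate is chosen about 99 percent of the time at temperature 0.2, but only about 66 percent of the time at 0.9. That is why low settings give more consistent answers. Note what the math does not do: it never checks whether the most likely word is true.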

Drawbacks: Lack of Access

On many AI tools you cannot change the temperature setting. Tools like ChatGPT state that they change the temperature settings based on the user’s use case or prompt, but you are required to take their word for it. Plus, it can be wearing to always remember to change the temperature settings up and down.

Number 6 — Forced Citations and Auto Link-Ping

Force the AI tool to provide specific citations for every legal claim. When prompting, tell the AI tool to provide legal statements or legal conclusions only with a citation to a real case, with a live link.

You can then write a simple computer script to automatically verify those citations exist by pinging the link. (Note: if you’re not certain what I just said, this is likely not the technique for you.) This technique combines prompt engineering with technical verification to catch fabricated cases before they reach your work product.

How it works in legal practice:

Structure your prompts to require citations in a specific format: "Provide the case name, citation, year, and relevant page numbers for each legal principle."

Require your AI tool to give you a live link to the case, then check the link.

Require AI to quote specific language from cases, not just paraphrase holdings.

Use citation verification tools that automatically "ping" legal databases to confirm cases exist.
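Here is roughly what such a link-ping script could look like, using only Python's standard library. Treat it as a sketch under assumptions: the function names are mine, the URL pattern is deliberately simple, and some websites refuse automated "HEAD" requests, so a failed ping means "look closer," not "fake."

```python
import re
import urllib.error
import urllib.request

# Simple pattern for http(s) links; stops at whitespace and closing brackets.
URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")


def extract_links(text: str) -> list[str]:
    """Pull links out of AI output, dropping trailing punctuation."""
    return [url.rstrip(".,;") for url in URL_PATTERN.findall(text)]


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status of a HEAD request, or 0 if the request failed outright."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, e.g. with 404
    except (OSError, ValueError):
        return 0  # no answer at all: bad address, timeout, malformed URL


def dead_links(text: str, fetch_status=http_status) -> list[str]:
    """Return the links whose status is not 2xx; review these by hand first."""
    return [url for url in extract_links(text) if not 200 <= fetch_status(url) < 300]
```

To use it, paste the AI's answer into a string and call `dead_links(answer)`. A live link only proves that a page exists. It does not prove the page is the case cited, or that the case says what the AI claims.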

Why it reduces hallucinations:

When AI knows it must provide verifiable citations, it becomes more conservative and precise. The automatic verification step catches the most dangerous type of legal hallucination - fabricated case citations that could embarrass you in court or with clients.

Drawbacks: Accuracy

This is a pretty simple solution to implement. You use good prompting techniques (check out good prompting guidelines here). And you combine that with a simple script. It can feel a bit daunting if you’re not familiar with running scripts, or good prompting protocols. Prompting protocols are easy to change. But I know from personal experience that even high-quality prompting will not stop an AI from hallucinating.

Number 5 — Feed the Model the Controlling Authority in the Prompt (The "One Good Case" Method, AI-Style)

When I was in law school, they taught us as 1Ls to use the “one good case” method of legal research. Do law schools still teach the “one good case” method? In case they don’t, here’s how it goes: you find a good case. Squarely on point, or at least as much as possible. You then use the headnotes to find additional useful, relevant cases. The idea is that once you’re on the right track, you’re more likely to find what you’re looking for. The same idea applies when using your AI tool for legal research.

Instead of asking AI to find relevant law from scratch, provide the key controlling cases or statutes directly in your prompt. Or at the very least, include one good case that is on point. This grounds the AI's analysis in verified legal authority, reducing the temptation to fabricate supporting citations. It’s essentially one-shot or few-shot prompting.

How it works in legal practice:

Research and identify the one or two most relevant controlling cases for your legal issue.

Copy and paste the key text and citations from these cases directly into your AI prompt.

Ask the AI to analyze your facts against this specific legal framework.

Ask the AI tool to find new cases similar to the case or cases you included in the prompt.
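If you do this often, a small template keeps the pieces in the same order every time. This is a sketch only: the function name is mine, and the bracketed values are placeholders you would replace with the citation and text from your own research.

```python
def one_good_case_prompt(case_excerpt: str, citation: str, facts: str) -> str:
    """Build a prompt that anchors the analysis to authority you already verified."""
    return (
        "Below is controlling authority that I have verified myself.\n\n"
        f"Citation: {citation}\n"
        f"Excerpt:\n{case_excerpt.strip()}\n\n"
        f"My facts:\n{facts.strip()}\n\n"
        "1. Analyze my facts against this authority only.\n"
        "2. Then list any similar cases you are certain exist, with full citations. "
        "If you are not certain, say 'I don't know.'"
    )


# Placeholder values; paste in the real citation and text from your own research.
prompt = one_good_case_prompt(
    case_excerpt="[paste the key passage here]",
    citation="[verified citation here]",
    facts="[your client's facts here]",
)
print(prompt)
```

Any "similar cases" that come back are leads, not authorities, until you have pulled and read them.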

Why it reduces hallucinations:

When you give the AI the controlling legal authority upfront, you are grounding it, and giving it an example of what you want. This will focus the tool’s attention on finding more similar, and hopefully real, cases, which dramatically reduces the risk of citing non-existent cases.

The best use case for this is when you are referring to a statute, or responding to opposing counsel’s motion. You can give your AI tool the statute or cases, and it will be more likely to give you additional or adverse cases that are not hallucinations.

Drawbacks: Accuracy, Time, Access

If you can find one good case, you could likely find more. This method is not for those who are hoping to avoid all legal research. And if you use a legal-specific tool to find your first case, there is no need for this method because you can just keep using your legal-specific tool.


Number 4 – Automated Citator Cross-Check (Shepard’s, KeyCite, Brief/Quick Check)

Don't just verify that AI-cited cases exist - ensure they're still good law. Integrate automated citator services to check the current validity and treatment of every case the AI references, catching overruled decisions and negative treatment that could undermine your legal arguments.

How it works in legal practice:

Route all AI-generated citations through automated Shepard's (Lexis) or KeyCite (Westlaw) validation.

Set up alerts for any case marked with negative treatment signals (overruled, questioned, criticized).

Use Brief Check or Quick Check tools to scan entire AI-generated documents for citation validity.

Create workflows that flag cases with warning signals for manual attorney review.
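The flagging step in that workflow might look something like the sketch below. I am assuming you have already run your citations through a citator and saved the results in some form. The data layout (`citation`, `found`, `treatment`) is hypothetical and is not the export format of Shepard's, KeyCite, or any other product, and the case names are placeholders.

```python
# Treatment signals named above that should always trigger attorney review.
NEGATIVE_SIGNALS = {"overruled", "questioned", "criticized"}


def flag_for_review(report: list[dict]) -> list[tuple[str, list[str]]]:
    """Return (citation, reasons) for every case that needs a human to look at it."""
    flagged = []
    for row in report:
        signals = {signal.lower() for signal in row.get("treatment", [])}
        hits = sorted(signals & NEGATIVE_SIGNALS)
        if not row.get("found", True):
            flagged.append((row["citation"], ["not found"]))
        elif hits:
            flagged.append((row["citation"], hits))
    return flagged


# Hypothetical citator results; the layout and case names are placeholders.
report = [
    {"citation": "Case A (placeholder)", "found": True, "treatment": ["Followed"]},
    {"citation": "Case B (placeholder)", "found": True, "treatment": ["Overruled", "Criticized"]},
    {"citation": "Case C (placeholder)", "found": False, "treatment": []},
]
for citation, reasons in flag_for_review(report):
    print(f"REVIEW: {citation} ({', '.join(reasons)})")
```

A case the citator cannot find at all goes to the top of the review pile, since that is the classic sign of a fabricated citation.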

Why it reduces hallucinations:

Even when AI correctly identifies real cases, it may not know about recent negative treatment. A case that was good law in the AI's training data might have been overruled last month. Automated citator cross-checking ensures you're not building arguments on shaky legal foundations.

Drawbacks: Price, Time

This is a pretty standard practice for any litigator. Make sure your case is still good law. The difference here is that you can use the accuracy of Westlaw or Lexis, or a lower-cost citation service, but not pay the more expensive price for the AI version of those tools. You can then use the power of your lower price AI model to help find additional cases.

Number 3 — Use Analytical AI Models

Analytical AI models are ones that approach a prompt step-by-step, rather than giving you the first and quickest answer they hit upon. An example of this is ChatGPT’s o3 model, or the Deep Research feature of your AI tool. If you’re not sure if you are using an analytical model, you can still accomplish basically the same thing through prompting. Force the AI to work through legal problems step-by-step using structured, analytical prompts rather than asking for quick answers. This methodical approach discourages the AI from skipping logical steps or fabricating supporting information to reach plausible-sounding conclusions.

How it works in legal practice:

Break complex legal questions into sequential analytical steps that mirror legal reasoning.

Use structured prompts that require the AI to identify issues, state rules, apply facts, and reach conclusions.

Choose AI models known for reasoning capabilities (like Claude or GPT-4's advanced reasoning), or use AI models known for accuracy, such as Perplexity.

Force the AI to show its analytical work rather than jumping to conclusions.
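One way to build that structure into every prompt is a reusable template that follows the issue, rule, application, conclusion sequence described above. The wording of each step and the sample question are my own suggestions, offered as a starting point rather than a proven formula.

```python
# Each step mirrors the issue / rule / application / conclusion sequence.
IRAC_STEPS = [
    ("Issue", "Identify the precise legal issue raised by the facts."),
    ("Rule", "State the governing rule and the authority for it. "
             "If you are not certain an authority exists, say so."),
    ("Application", "Apply the rule to the facts, one element at a time."),
    ("Conclusion", "Give a conclusion and note any uncertainty."),
]


def irac_prompt(question: str) -> str:
    """Wrap a legal question in numbered steps the AI must work through in order."""
    lines = [
        f"Question: {question.strip()}",
        "",
        "Work through these steps in order and show your work for each:",
    ]
    for number, (name, instruction) in enumerate(IRAC_STEPS, start=1):
        lines.append(f"{number}. {name}: {instruction}")
    return "\n".join(lines)


# Hypothetical example question.
print(irac_prompt("Does the business judgment rule protect this board decision?"))
```

Because the steps are numbered, it is easy to spot when an answer skips one, which is usually where the trouble starts.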

Why it reduces hallucinations:

When AI must work through problems methodically, it's much harder to fabricate supporting information or skip logical steps. The structured approach forces more careful, deliberate searches, making the AI less likely to hallucinate.

Drawbacks: Accuracy

This is an easy fix. It’s using best practices for prompting. But it is not completely accurate all of the time. It is, however, relatively inexpensive, and not unduly burdensome.

Number 2 — Use Legal-Specific AI Platforms with Embedded Case Law

Choose AI tools specifically designed for legal work that have integrated access to verified legal databases. These platforms dramatically reduce hallucinations because they can only reference cases and statutes that actually exist in their connected legal databases.

Note that even legal-specific AI tools hallucinate. Perhaps they don’t make up case citations from whole cloth, but they can cite the wrong case for the legal proposition you’re trying to support.

How it works in legal practice:

Use AI platforms that are directly connected to Westlaw, Lexis, or other verified legal databases.

These tools retrieve actual case text before generating responses, rather than relying on training data memory.

Legal-specific platforms often include built-in citation verification and case law validation.

Many offer real-time access to current legal databases, ensuring up-to-date legal information.

Some examples:

Note that I am not affiliated with any of these brands or their apps. And they are listed in no particular order.

Why it reduces hallucinations:

This method requires the AI tool to find its cases within an accurate, up to date digital library. When AI can only reference verified legal sources, fabricated citations become nearly impossible. Note that I said nearly.

Hallucinations are still possible. Although the legal-specific AI tools are far and away less likely to provide a fake case, they can still give you the incorrect case for your legal proposition. This can be problematic if you are looking for a case about the Business Judgment Rule and cite a case that has to do with Fourth Amendment searches. Still, at least you are more likely to just look foolish, and not be referred to your state bar or be sanctioned.

Drawbacks: Price

Legal-specific tools can be very pricey. If you are tempted to do the least amount of work to find good cases, or if you want to use AI but are not willing to check the output, then the price might be worth it.

And,

Drumroll please . . .

Number 1 – Verify all Output

Check all AI Output. Period. End of Sentence.

Is this too easy? Too common-sensical? I realize it’s about as fun as the suggestion to prevent pregnancy by abstinence. But abide by this rule, or risk hallucinations.

Really, if you are doing this, you need no other techniques. As humans, we default to the road more frequently traveled; to the route that looks easiest, and the path of least resistance. But there is no magic AI tool that will always give you perfect citations.

The drawbacks are just the time and effort it takes to actually verify your AI output, which you should be doing anyway.

This goofy Letterman-style parody seems the best way to deal with the utterly foolish and unnecessary situation we find ourselves in with lawyers and even a judge citing false cases and propositions. So with thanks, and perhaps apologies, to Mr. Letterman for emulating his format and name,

(imagine this in a resonant, TV show host voice . . . )

thank you,

And goodnight everybody!

Best Practices for Legal Professionals:

1. Always, always, always verify all output, and especially all case and legal citations. Always.

2. Use AI as a tool, not a final authority. It is your assistant, not your replacement.

3. Keep your prompts (and your expectations) grounded in the law.

© 2025 Amy Swaner. All Rights Reserved. May use with attribution and link to article.