An Analysis of the EU's AI Act: Implications for the Future of AI Regulation in the US

And Best Practices to Advise Your Client Wherever They Live

Despite Biden’s first and second AI-centered Executive Orders, we in the United States still do not have comprehensive rules and regulations for AI. In this regard, the European Union beat us to the punch. The European Union’s Artificial Intelligence Act (AI Act) is the first law of its kind, creating a comprehensive framework for regulating artificial intelligence within the EU’s borders. At its heart, this legislation is what Sam Altman and “tech bros” have been urging for more than two years. It sets the global tone for AI regulation, balancing the need for innovation with public safety and ethical considerations. This analysis examines the provisions of the AI Act, highlights its strengths and weaknesses, and considers how the United States may respond in the absence of a comparable federal AI regulation.

Provisions of the EU AI Act

The EU’s AI Act is designed as a risk-based framework, regulating AI systems based on the level of risk they pose to public safety, human rights, and fundamental freedoms. The core provisions of the Act are:

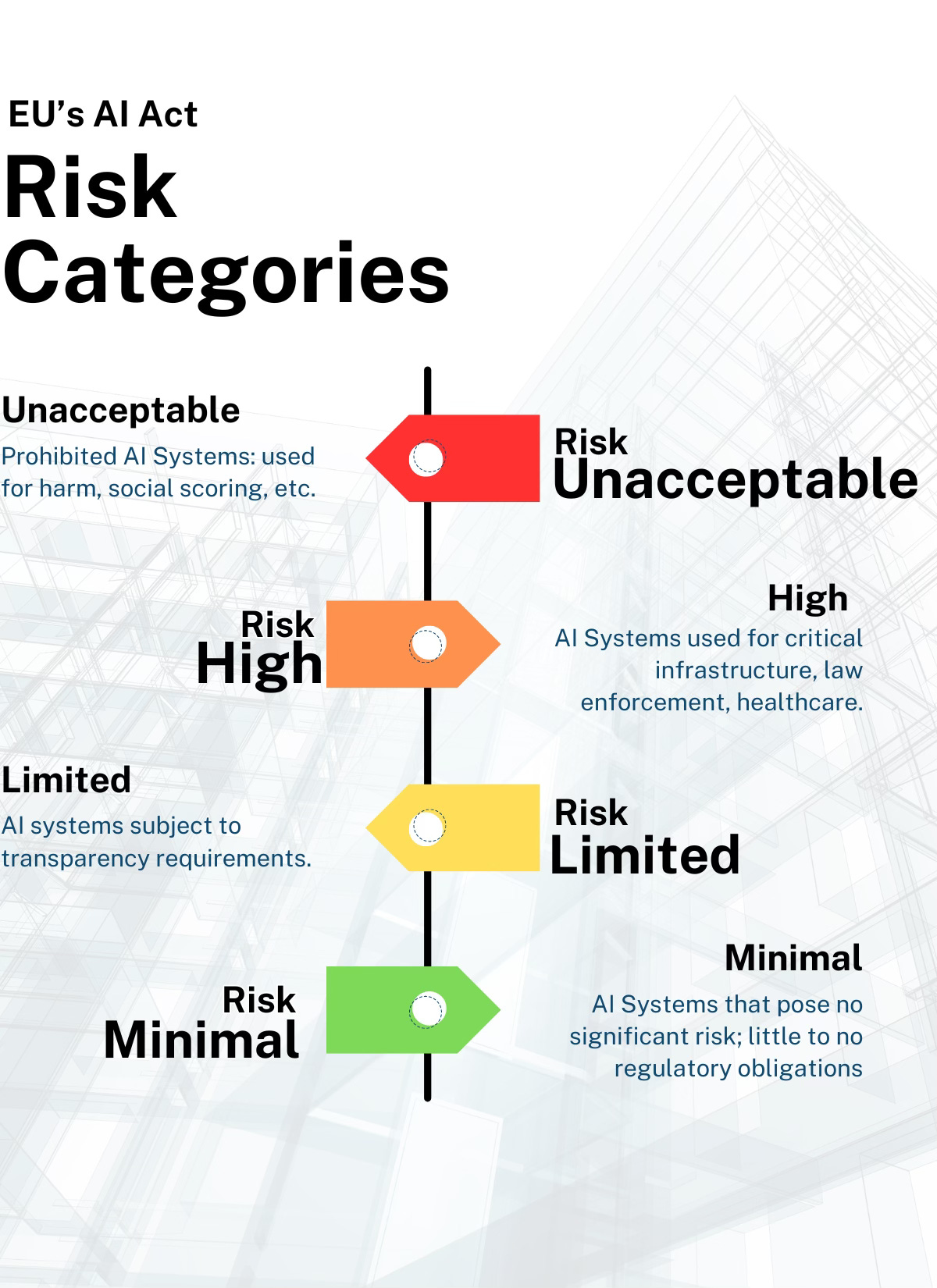

Risk Categories: AI systems are classified into four tiers of risk:

image by Amy Swaner © 2024 Amy Swaner, use with attribution and link

Unacceptable Risk: Prohibited AI systems, such as those used for social scoring or manipulative practices.

High Risk: AI systems requiring strict compliance, including systems used in critical infrastructure, law enforcement, and healthcare. For example, Zebra Medical Vision, a company whose AI diagnostic tools process protected personal and health information, would likely fall into this category. Another would be Clearview AI, which uses biometrics to identify people for law enforcement, if its system were used in the EU, although its practice of building a facial recognition database by scraping images from the internet is itself among the practices the Act prohibits.

Limited Risk: AI systems subject to transparency requirements, such as chatbots or systems that generate content. Unlike high-risk systems, Limited Risk systems are not required to register under the Act. Dialogflow, Google’s platform for building AI chatbots, would likely fall into this category if it were used in a customer-facing role in the EU.

Minimal Risk: AI systems that pose no significant risk, with little to no regulatory obligations (e.g., spam filters, video games). Examples include DeepL, an AI-powered language translation app, and Grammarly, an app for improving grammar and writing.

Compliance Obligations for High-Risk Systems: High-risk systems face stringent requirements, including risk management, data governance, transparency, human oversight, and cybersecurity standards. Before they can be placed on the EU market, these systems must undergo conformity assessments, which in some cases require certification by an independent notified body.

Prohibited Uses: Systems that manipulate human behavior or exploit vulnerabilities are banned, as is real-time remote biometric identification in publicly accessible spaces, except in narrowly defined law enforcement cases. Notably, civil society organizations such as the Ada Lovelace Institute advocated for broader bans on biometric surveillance to protect fundamental rights (Ada Lovelace Institute) (Competition Policy International).

Enforcement and Penalties: The Act imposes fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher, for breaches related to prohibited AI practices, with lower penalties for other non-compliance issues (CSET).

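The Act entered into force on August 1, 2024, but its obligations phase in gradually: the prohibitions apply from February 2025, and most remaining provisions apply from August 2026. This gives companies time to align their practices with its requirements.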

The Best Aspects of the EU AI Act and Its Likely Benefits to the EU

The EU AI Act presents a bold and forward-thinking regulatory framework with several strengths:

Ethical AI Development and Governance: The Act strikes me as exactly what I would envision from the cultured and highly regulated EU. It seeks to ensure that AI systems align with European values and fundamental rights, and to promote trust in AI technology by prohibiting systems that pose unacceptable risks to human dignity and by mandating transparency. It also includes measures intended to spur innovation. Civil society advocates such as the Ada Lovelace Institute have praised the emphasis on accountability, which is expected to foster a more ethical AI ecosystem (Ada Lovelace Institute) (Techzine Global).

Clear Guidelines for Innovation: The Act’s risk-based categorization clearly separates AI systems by the types of information they process and how that information is used. High-risk systems face strict requirements and mandatory registration, while lower-risk systems have more flexibility and are not required to register. This structured approach is undoubtedly meant to encourage responsible innovation in sectors like transportation and energy while tightening controls on more invasive or potentially harmful systems (Lewis Silkin).

Global Leadership in AI Regulation: The Act positions the EU as a global leader in responsible AI governance. Much like the GDPR’s influence on data privacy laws worldwide, the AI Act is expected to shape AI regulations in other regions. This could boost Europe’s AI market and attract investment in responsible AI development (CCN.com); at the very least, it will bolster the EU’s image as a leader in data privacy and AI governance.

Support for Startups: One of the Act’s most beneficial provisions offers regulatory sandboxes and test environments in which small businesses can develop AI models, fostering innovation from the ground up. This safe space can be extremely helpful for a company developing a system in a field such as healthcare, where the potential is high but so is the risk (CSET).

Human Oversight and Accountability: By mandating human oversight of AI systems, particularly in high-risk areas, the Act ensures that AI remains a tool that supplements human judgment and decision-making. This reinforces accountability and reduces the risk of AI errors causing unintended harm (Ada Lovelace Institute).

The Worst Aspects of the EU AI Act and Its Likely Detriments to the EU

Despite its strengths, the AI Act presents several challenges that could hinder its effectiveness:

Bureaucratic and Compliance Burdens and Overregulation: For companies operating high-risk AI systems, the administrative and compliance costs of meeting the Act’s stringent requirements are significant. Smaller companies will likely struggle to comply, potentially stifling innovation and creating barriers to market entry. Industry experts and legal analysts have raised this concern (Lewis Silkin) (CCN.com), warning that the extensive testing, documentation, and oversight requirements might deter companies from investing in AI development within Europe, especially since some requirements could be too costly for smaller startups (Techzine Global).

Complexity for Startups: The complexity of the Act, especially its documentation and risk assessment obligations, poses significant challenges for small and medium-sized enterprises (SMEs). These businesses may incur heavy costs to comply, such as hiring external consultants to help them navigate the requirements (CSET) (CCN.com). Because of these added costs, fewer new companies may launch, even in beneficial and promising fields such as medical diagnosis and healthcare.

Inflexibility for Rapidly Evolving Technologies: Generative AI models are changing and advancing monthly, even daily. At this lightning pace, the AI Act may quickly become outdated and less useful. Its rigid risk categories could hinder the development of cutting-edge AI applications that do not fit neatly into existing categories (CSET).

Impact on Competitiveness: While the Act aims to protect fundamental rights, there is concern that the stringent regulations may put European companies at a competitive disadvantage on the global stage, especially against regions with more lenient AI regulations (Competition Policy International) (CCN.com).

Comparing the EU’s AI Act to the USA’s Lack of Federal AI Regulation

The EU’s approach to AI regulation starkly contrasts with the United States, where no comprehensive federal AI regulation exists. While the U.S. has initiated discussions on AI ethics and guidelines, and President Biden issued two Executive Orders regarding AI (First Executive Order, Second Executive Order), regulation here in the U.S. remains largely decentralized, with states and individual agencies setting rules for specific AI uses (e.g., California’s privacy laws or the FAA’s drone regulations).

Key implications of the U.S. lacking federal AI regulation include:

Flexibility for Innovation: Without strict federal regulations, AI companies in the U.S. have greater freedom to innovate and bring products to market rapidly. This may give U.S. companies a competitive advantage over European companies facing heavy compliance obligations under the AI Act. These companies avoid not only the up-front expense of complying with the regulations but also the ongoing burden of maintaining compliance. This benefit may be largely illusory, however. If AI companies place their products on the EU market or their systems’ output is used in the EU, the Act requires them to comply with its terms wherever they are based.

Risk of Ethical Lapses: The lack of comprehensive oversight leaves room for ethical violations, such as bias in AI decision-making or the use of AI in surveillance without sufficient accountability. It could also allow personally identifiable information and other confidential or sensitive information to continue to be used to train AI models. This could erode public trust in AI, a problem the EU seeks to avoid with its transparent and ethical framework (Ada Lovelace Institute) (Techzine Global).

Global Leadership and Fragmentation: The U.S. risks falling behind in shaping global standards as the EU takes a leadership role in AI governance. If U.S. companies develop AI systems that do not meet European standards, they may face barriers to entering the EU market.

The fragmented regulatory landscape within the U.S. could create confusion for AI companies, especially those operating across state lines (Ada Lovelace Institute) (JURISTnews).

One of my greatest concerns with the lack of a federal standard is that we will end up with different and sometimes conflicting laws. Various states and localities have already passed AI-related laws, and many more have been proposed. The result will look like a patchwork quilt of requirements that U.S. companies will need to navigate.

Conclusion

The EU’s AI Act is a lodestar for the U.S. and other countries interested in creating an AI law. It represents a milestone in AI regulation, balancing the need for innovation with public safety, accountability, and ethical considerations. While it positions the EU as a leader in responsible AI governance, it might present challenges, particularly for small companies and cutting-edge AI applications.

Best Practices for Lawyers with AI System Clients

Sources: