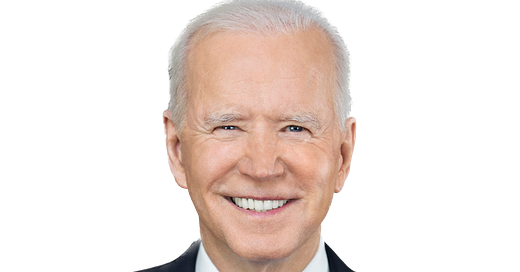

A Bold New Step For Biden With Second AI Executive Order

Biden is set to sign a new AI Executive Order today, and this one is more than just aspirational.

Biden is taking an additional step into AI governance with an Executive Order set to be signed today, November 20, 2023. His First AI Executive Order, issued only weeks ago on October 30, 2023, appeared to be a sweeping move into the uncharted territory of AI governance but was largely aspirational, as I wrote in my article of November 4. Today Biden is going a step further with this new Executive Order, which includes standards and enforcement powers.

The First AI Executive Order mentioned that the White House wanted to see more red teaming. Red teaming is the process by which AI engineers test AI outputs to verify that they are not discriminatory, biased, or misaligned. After all, the biases and accuracy of AI systems and the LLMs that power them reflect the information used to train them and the fine-tuning done by engineers. The US government has already obtained voluntary commitments from several companies to conduct red team testing, but the key word here is voluntary.

With generative AI having spread so widely in such a short time, we will see a number of AI models whose owners have not promised to run red team tests. In addition, opinions will likely vary widely on the nature, depth, and accuracy of the red team tests themselves. The major difference with this Executive Order is that the US Government will now set standards, tools, and tests for red teaming. This clarification will be essential for companies to understand how they must comply with the government mandates. Companies will be required to notify the government, likely via a new AI governance agency, of the results of their tests. In effect, companies will have to pass certain tests before they can release their models commercially.

As more companies incorporate AI into their products, it makes sense that the Government wants to verify that AI systems will be used in unbiased, nonfraudulent ways. The enforcement power Biden will use to begin doing this rests largely on the Defense Production Act of 1950 (“DPA”). Originally this Act allowed the President to set wages, ration consumer goods, and set certain prices, all measures that made sense during the Korean War, which is why the Act was originally passed. The current version of the DPA was reauthorized in 2019 and is set to expire in 2025. It has fewer powers than the original 1950 version, but still has teeth. The DPA allows the President, through Executive Order, to direct private companies to prioritize the orders of the federal government. Although it has routinely been used by the Defense Department for military vehicles and equipment, Biden intends to use it to require private companies such as OpenAI, along with other developers of large language models and AI systems, to prioritize red team testing and report the results. This is appropriate considering the national security implications of generally available artificial intelligence.

Although red teaming can be beneficial, it will likely also have a chilling effect on AI products and models. Companies producing large language models will bear the additional overhead of complying with the testing and verification processes.

Red team testing is expensive and difficult. This type of testing is common in the cybersecurity world, with highly specialized services available from a small number of companies. At present, only the owners of the largest LLMs and AI models are able to afford to conduct their own red team testing. The Second AI Executive Order indicates that the federal government will conduct red team tests itself, or at least review the results of tests conducted by AI model owners. But the Order recognizes that the federal government does not currently have enough engineers and other staff with the knowledge and ability to conduct these tests, or even to review them. We will continue to see a huge upswing in AI engineering as a new career path, especially for those with a background in computer science, mathematics, or data science who can quickly jump into the new and evolving world of AI technology.

The Second AI Executive Order also emphasizes the need for a sort of AI “watermark” or “serial number” to allow consumers to identify the products of AI. With nightmare scenarios ranging from impersonating the voices of family members or loved ones in order to extort money, to books fraudulently passed off as the work of famous authors, to pornography that uses the likenesses of real people, there is a great deal of harm to be prevented. It is not yet clear how such a watermark would work or how it would be enforced.

Although this Executive Order will need to be bolstered by Congress passing more laws with standards, enforcement powers, and oversight, it is a solid start. Biden also announced that he will meet with a bipartisan group of senators this week. It will be interesting to see how Congress aligns with these orders. Senators Hawley and Blumenthal will likely continue to advocate for AI legislation in the Senate.